Anyone who has delved into the world of clustering (one of the main types of unsupervised learning) will have encountered – knowingly or unknowingly – the idea of ‘natural kinds’. The concept of natural kinds has a long history behind it. The idea is that objects in the world can be grouped into objective categories, which capture the fundamental essence of different types of objects and allow individual objects to be logically and usefully grouped into these fundamental categories.

To put this more concretely, the idea is that there is some essence that objects possess that naturally makes them one type or another – an essence of ‘tree-ness’ that all trees possess, and which makes all trees trees and, similarly, an essence of ‘chair-ness’ that makes all chairs chairs. Any of the more superficial properties of trees or chairs are simply derived from, are a reflection of, or are at least consistent with, this fundamental essence.

Although this explanation might make natural kinds seem a bit mystical, it’s arguable that the concept of natural kinds is essential to science. To do science, we assume there are non-subjective types of objects in the world – electrons, molecules, living organisms – that we can investigate and describe with universal laws. And one of the goals of science is to discover the natural kinds that we can use in creating universal laws. There is also some evidence that humans are hard-wired to think of the world in terms of natural kinds. Even very young children seem to intuitively group objects into certain categories (e.g. animate vs inanimate) depending on the observed behaviours of these objects.

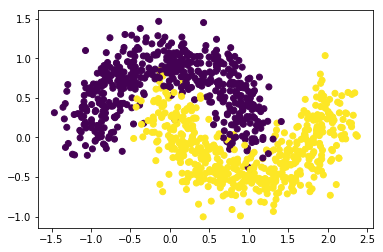

Trying to cluster data points (and by extension the objects represented by these data points) using clustering algorithms brings many of our assumptions about natural kinds and object similarity to the surface, and forces us to examine them more closely. In this context we can ask: will a given clustering algorithm help us to uncover hitherto unknown natural kinds hiding in our data? If so, how can we tell, and what would it mean if it did?

I have discussed clustering with a fair number of people, and often the concept of superficial vs fundamental properties (and by extension similarities) comes up. The colour of a chair seems irrelevant to it being a chair, while the shape of the chair seems more fundamental, or essential. The fact that, in general, clustering algorithms consider the colour of two chairs as important as their shape in determining their similarity would seem to suggest a flaw in the idea of clustering as implemented by these algorithms.
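To make the equal-weighting point concrete, here is a minimal sketch of how a distance-based similarity treats features. The chairs, the three features and the weights are all invented for illustration, and the `weighted_distance` helper is my own, not part of any particular clustering library.

```python
import math

# Hypothetical features, each scaled to 0..1: (colour, seat_height, has_back)
red_chair = (0.0, 0.5, 1.0)
blue_chair = (1.0, 0.5, 1.0)   # differs from red_chair only in colour
red_stool = (0.0, 0.5, 0.0)    # differs from red_chair only in shape (no back)

def weighted_distance(a, b, weights):
    """Euclidean distance in which each feature's squared difference is scaled by a weight."""
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))

equal = (1.0, 1.0, 1.0)        # every feature counts the same
shape_first = (0.1, 1.0, 1.0)  # assumed weights that play down colour

# With equal weights, a colour difference is exactly as large as a shape difference.
colour_gap_equal = weighted_distance(red_chair, blue_chair, equal)
shape_gap_equal = weighted_distance(red_chair, red_stool, equal)

# With colour down-weighted, the two chairs end up closer than the chair and the stool.
colour_gap_weighted = weighted_distance(red_chair, blue_chair, shape_first)
shape_gap_weighted = weighted_distance(red_chair, red_stool, shape_first)
```

Choosing weights like `shape_first` is one way of building our own judgement about what is essential into the similarity measure, which is exactly the kind of assumption worth examining.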

This may be misleading, however, because our biases can also lead us astray. Since I’m writing this article at a cottage, let’s consider a cottage example. If I compare the plastic tablecloth on the kitchen table with the canoe sitting outside the door, I can see that they are oddly, if seemingly superficially, similar. Both are yellow on one side and white on the other. Their dimensions are of a similar magnitude. In a pinch, you could use both of them to carry water, by bunching up the tablecloth or filling the interior of the canoe. But essentially, we might want to say they are quite different – one is a type of boat, with more essential similarities to barges and ocean liners, and one is a type of table linen, with more essential similarities to napkins and placemats. The fact that a clustering algorithm would say they are very similar would seem to be a mistake.

On the other hand, perhaps the algorithm is pointing out something new and useful. Alongside all of their similarities, an important difference between the plastic tablecloth and the canoe is that one is rigid and one is not. But it would seem that, if we had a way to make the plastic tablecloth as rigid as the canoe, perhaps by putting it over a light wooden frame, it could, in fact, operate in a similar fashion, much in the way a birch bark canoe functions. And perhaps we could reconceptualize the canoe in reverse fashion, and use it as a place to put a meal. In this way, realizing that these two different objects have more similarities than we typically might notice can lead us to think of them in new and useful ways.

So how should we approach clustering when thinking about natural kinds? Since clustering is unsupervised learning, it is often useful in the context of hypothesis discovery. For example, while carrying out scientific research we might use clustering results in conjunction with domain-specific knowledge to develop new hypotheses about natural kinds in that particular domain. The robustness of these hypothetical natural kinds could then be investigated further, using experimental techniques.

Consider, for example, the food sciences paper ‘Using texture properties for clustering butter cake from various ratios of ingredient combination’ (Suworanee, 2018). In this project, 27 experimental butter cakes (for which the recipes were known) and 12 commercial cakes were clustered based on a number of cake properties (e.g. springiness, cohesiveness) and four main clusters were found. Suworanee used these cluster results, along with domain knowledge, to hypothesize that these clusters of cake properties were a result of the differing structures of air cells within the cakes, which themselves were hypothesized to be a result of the varying ratios of ingredients used to make the cakes. Suworanee went on to investigate these hypotheses by looking at the air cell structure of the different cake types under a microscope, and then further relating these results to the ingredient ratios in the experimental cakes.

Since cake is something many of us have some familiarity with, we can perhaps appreciate how Suworanee used clustering results along with domain-specific knowledge to develop a hypothesis about underlying structures or mechanisms that could be responsible for the development of these clusters of cake properties. By extension, we can perhaps also see how this approach might be used to interpret and make use of clustering results in other domains. We might use the clustering results in combination with our existing knowledge to develop hypotheses about more fundamental properties or mechanisms that have led to these clusters of properties, and then investigate our hypotheses about these underlying mechanisms further.
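As a rough sketch of the first step in that workflow, the snippet below clusters a handful of made-up measurements and returns a per-cluster average that an analyst could then try to explain. The numbers are invented and are not data from the paper; the feature names are borrowed from the cake example only for flavour; and plain k-means with hand-picked starting centroids is my own choice for illustration, not necessarily the method Suworanee used.

```python
import math

# Invented (springiness, cohesiveness) measurements; NOT data from the paper.
samples = [
    (0.90, 0.80), (0.85, 0.75), (0.88, 0.82),
    (0.30, 0.40), (0.35, 0.38), (0.28, 0.45),
]

def kmeans(points, centroids, iterations=10):
    """Plain k-means (Lloyd's algorithm) from caller-supplied starting centroids."""
    labels = []
    for _ in range(iterations):
        # Assignment step: index of the nearest centroid for each point.
        labels = [
            min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        updated = []
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:
                updated.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                updated.append(centroids[k])  # leave an empty cluster's centroid in place
        centroids = updated
    return labels, centroids

# Starting centroids are picked by hand so the run is deterministic.
labels, profiles = kmeans(samples, centroids=[samples[0], samples[3]])
```

Each entry in `profiles` is the average feature vector of one cluster. That profile is the raw material for a hypothesis (why are these items springy and cohesive while those are not?), which then has to be tested by other means.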

This is a good start, but there can be other issues relating to our assumptions about how clustering algorithms work. For example, when interpreting clustering results, we may assume that the resulting clusters are based upon the same similarities, so to speak, across all of the clusters, which is not necessarily the case. As well, it isn’t always clear how generalizable clustering results are. I’ll follow up on these two possible issues in future blog articles. For now, my suggestion is to always keep in mind the goals of a clustering project and proceed accordingly. As I hope to have illustrated here, if the main goal is the discovery of natural kinds, interpretation of the clustering results may only be the first step in a multi-step project.